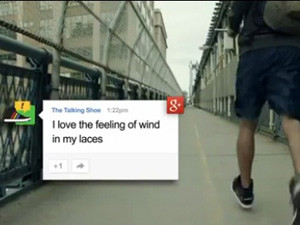

Google has shown off another one of its wearable device prototypes at the annual SXSW conference, and this time it's a talking shoe - with attitude.

The shoe is a modified Adidas high-top which is fitted with a pressure sensor, accelerometer and gyroscope, as well as a microcontroller, Bluetooth connectivity and a speaker.

When paired with the wearer's smartphone via Bluetooth, the shoes can detect movement, or the lack of it, and give spoken feedback accordingly. Not all feedback will be the same, though, because the shoes can be given different "personalities". A shoe with a lazy personality, for example, will start shouting at the wearer if they break into a run, while a shoe with an active personality will cheer the wearer on for getting moving.
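Google has not published how the shoe decides what to say. As a rough illustration of the idea, the following Python sketch assumes the phone receives short windows of accelerometer readings in g, classifies them as idle, walking or running, and looks up a line for the chosen personality. The thresholds, function names and spoken phrases are all hypothetical.

```python
import math

# Hypothetical thresholds (in g) on the average deviation from gravity.
WALK_THRESHOLD = 0.15
RUN_THRESHOLD = 0.6

# Hypothetical personality scripts: same activity, different spoken response.
PERSONALITIES = {
    "lazy": {
        "idle": "Ah, this is nice.",
        "walking": "Do we have to?",
        "running": "Stop running right now!",
    },
    "active": {
        "idle": "Come on, let's move!",
        "walking": "Good, keep it up.",
        "running": "Yes! Go, go, go!",
    },
}


def classify_activity(samples):
    """Classify a window of (x, y, z) accelerometer samples, in g.

    Uses the mean deviation of the acceleration magnitude from 1 g
    (gravity at rest) as a crude measure of how vigorously the wearer moves.
    """
    if not samples:
        return "idle"
    deviations = [abs(math.sqrt(x * x + y * y + z * z) - 1.0) for x, y, z in samples]
    level = sum(deviations) / len(deviations)
    if level >= RUN_THRESHOLD:
        return "running"
    if level >= WALK_THRESHOLD:
        return "walking"
    return "idle"


def feedback(personality, samples):
    """Pick the spoken line for this personality and the detected activity."""
    return PERSONALITIES[personality][classify_activity(samples)]


print(feedback("lazy", [(0.0, 0.0, 2.0)] * 5))    # strong movement, lazy shoe
print(feedback("active", [(0.0, 0.0, 2.0)] * 5))  # strong movement, active shoe
```

The same sensor reading produces opposite reactions depending only on the personality table, which is the behaviour described above.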

A Google representative at SXSW told Engadget: "The whole reason why we're doing this, and making this come to life, is to show what happens when you take a lot of data and use it to tell interesting, funny, relevant stories in new and different ways."

Google has emphasised it is not looking to break into the shoe business: "It's more about showing what is possible with data and showing off an early prototype of what that might look like."

The talking shoe is part of the search giant's "Art, Copy and Code" project, which focuses on connected objects: "It's all about how you might take everyday objects, like shoes, and connect them to the Web and use all that data to tell stories."

Google Glass

One of the more realistic wearable devices drawing attention at Google's display at the conference was Google Glass. Developer preview versions of Google Glass are already available for $1 500, and Google has previously said it aims to launch the product officially in early 2014.

Even before Google Glass becomes widely available, though, major privacy concerns have been raised. This weekend, a Seattle dive bar announced that it was banning Google Glass from its premises, and it has been suggested that it will most likely be the first of many businesses to ban the heads-up display glasses.

The dive bar's owner, Dave Meinert, says the ban was issued because the bar is a private place where taking unwanted photos or video recordings is not allowed. Google responded only that: "It is still very early days for Glass, and we expect that as with other new technologies, such as cellphones, behaviours and social norms will develop over time."

Google recently released a video demonstrating the user interface and some of the key features of Google Glass. The UI relies heavily on voice control: users can take photos and videos by voice, send and receive onscreen directions, search the Web and get onscreen translations.

Along with the video, Google encouraged public participation through the "If I Had Glass" campaign and a "Glass Explorer Program". Now the first third-party applications for Google Glass are starting to emerge.

According to a New Scientist report, a new app built for Google Glass and part-funded by Google itself aims to help users find people in crowds based on the clothes they are wearing, or their so-called "fashion fingerprint".

InSight, developed by Srihari Nelakuditi at the University of South Carolina together with Romit Roy Choudhury and colleagues at Duke University, is premised on the observation that facial recognition alone is not enough to recognise people in crowds, because the person being sought is unlikely to be facing the headset's camera.

The report explains that the "fashion fingerprint" is constructed by a smartphone app "which snaps a series of photos of the user as they read Web pages, e-mails or tweets. It then creates a file - called a spatiogram - that captures the spatial distribution of colours, textures and patterns (vertical or horizontal stripes, say) of the clothes they are wearing. This combination of colour, texture and pattern analysis makes someone easier to identify at odd viewing angles or over long distances."
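The report does not include InSight's code, so what follows is only a rough Python illustration of the general spatiogram idea, limited to colour (InSight also captures textures and patterns). Where an ordinary colour histogram only counts how many pixels fall into each colour bin, a spatiogram also records where those pixels sit, as a mean position and a positional covariance per bin. The function names, the bin count and the simplified similarity score (which ignores the covariances) are assumptions made for this sketch, not InSight's method.

```python
import math
from collections import defaultdict


def colour_spatiogram(image, bins_per_channel=4):
    """Build a second-order colour spatiogram (colour only; no texture or pattern).

    image: list of rows, each a list of (r, g, b) tuples with values 0-255.
    Returns {bin: (weight, mean_xy, cov_xy)}: the fraction of pixels in the
    colour bin, their mean (x, y) position, and the 2x2 covariance of positions.
    """
    height = len(image)
    width = len(image[0]) if height else 0
    step = 256 // bins_per_channel
    positions = defaultdict(list)
    for y, row in enumerate(image):
        for x, (r, g, b) in enumerate(row):
            key = tuple(min(c // step, bins_per_channel - 1) for c in (r, g, b))
            # Normalise coordinates to [0, 1] so images of different sizes compare.
            positions[key].append((x / max(width - 1, 1), y / max(height - 1, 1)))
    total = width * height
    spatiogram = {}
    for key, pts in positions.items():
        n = len(pts)
        mx = sum(px for px, _ in pts) / n
        my = sum(py for _, py in pts) / n
        sxx = sum((px - mx) ** 2 for px, _ in pts) / n
        syy = sum((py - my) ** 2 for _, py in pts) / n
        sxy = sum((px - mx) * (py - my) for px, py in pts) / n
        spatiogram[key] = (n / total, (mx, my), ((sxx, sxy), (sxy, syy)))
    return spatiogram


def spatiogram_similarity(s1, s2, sigma=0.2):
    """Simplified similarity in [0, 1]: Bhattacharyya overlap of bin weights,
    with each bin's contribution damped by the distance between its mean
    positions. (Ignores covariances; a fuller measure would use them too.)"""
    score = 0.0
    for key in s1.keys() & s2.keys():
        w1, (x1, y1), _ = s1[key]
        w2, (x2, y2), _ = s2[key]
        d2 = (x1 - x2) ** 2 + (y1 - y2) ** 2
        score += math.sqrt(w1 * w2) * math.exp(-d2 / (2 * sigma ** 2))
    return score


# Toy "outfits": red top over blue bottom versus the reverse.
RED, BLUE = (255, 0, 0), (0, 0, 255)
red_top = [[RED] * 4] * 2 + [[BLUE] * 4] * 2
blue_top = [[BLUE] * 4] * 2 + [[RED] * 4] * 2

a, b = colour_spatiogram(red_top), colour_spatiogram(blue_top)
print(round(spatiogram_similarity(a, a), 3))  # same outfit
print(round(spatiogram_similarity(a, b), 3))  # same colours, swapped layout
```

Both toy outfits are half red and half blue, so their plain colour histograms are identical; only the per-bin positions tell them apart, which is why the second score comes out far lower than the first.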

Nelakuditi explains that privacy is preserved because the fingerprint changes whenever a person changes their outfit: "A person's visual fingerprint is only temporary, say for a day or an evening."

In early tests, the InSight app correctly identified people in a test group 93% of the time. The Duke University researchers have published a full paper on the application.
